by Ken Kostel, Director of Research Communications, Woods Hole Oceanographic Institution

By now, just about everyone is aware of, and in some way impacted by, the Global Positioning System, or GPS. But the satellites that allow us to find our location almost anywhere on the planet to within inches are limited to just that: this planet. They are also only useful above water, which we’ll get to in a moment.

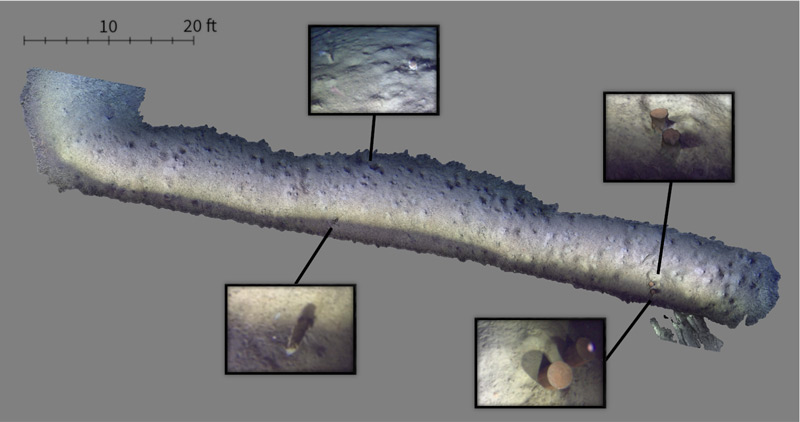

Photo reconstruction of an early Orpheus test dive. Image courtesy of ©Woods Hole Oceanographic Institution.

The lack of GPS on Mars forced NASA engineers to come up with a new method of wayfinding to support critical activities during the current Mars 2020 mission. Instead of signals from satellites in orbit, they used features on the surface of the planet as the basis of a vision-based system known as Terrain Relative Navigation.

During descent and landing, the spacecraft carrying the rover Perseverance and helicopter Ingenuity had to decelerate from 18,000 miles per hour to a near hover in what mission planners called “seven minutes of terror.” In that time, the lander needed to identify its landing site and, based on increasingly detailed imagery as the ground approached, make split-second decisions about where it would ultimately touch down — all without human intervention. The TRN system known as the Lander Vision System helped establish the lander’s location and then identified obstacles to avoid within the chosen landing site.

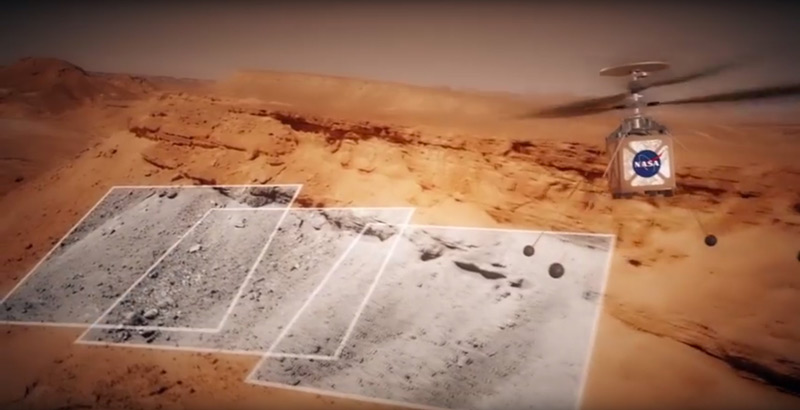

The Mars helicopter Ingenuity will collect images as it flies, partly to navigate the surface of the planet. Image courtesy of NASA/JPL-Caltech.

More recently, another TRN-based system has been used in the Mars helicopter Ingenuity. Again, without GPS to guide it, Ingenuity uses a camera to navigate the surface. As Ingenuity flies, it tracks features from high-resolution photos it takes to figure out where it’s going.
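To make the idea of tracking features between photos concrete, here is a minimal Python sketch. It is not the software flown on Ingenuity. It assumes the features have already been matched between two frames, that the camera points straight down at flat ground, and that a simple pinhole camera model applies. The function names, the altitude, and the focal length are all hypothetical values chosen for illustration.

```python
from statistics import median


def estimate_image_shift(prev_pts, curr_pts):
    """Median pixel displacement (dx, dy) of matched features between two frames.

    prev_pts[i] and curr_pts[i] are the (x, y) pixel positions of the same
    feature in the earlier and later frame. Taking the median keeps a single
    bad match from skewing the estimate.
    """
    if not prev_pts or len(prev_pts) != len(curr_pts):
        raise ValueError("need two non-empty, equal-length lists of matched points")
    dx = median(c[0] - p[0] for p, c in zip(prev_pts, curr_pts))
    dy = median(c[1] - p[1] for p, c in zip(prev_pts, curr_pts))
    return dx, dy


def camera_motion_m(shift_px, altitude_m, focal_length_px):
    """Convert an image shift into camera motion over the ground, in meters.

    Assumes a pinhole camera looking straight down at flat terrain, so one
    pixel spans altitude / focal_length meters on the ground. The scene
    appears to move opposite to the camera, hence the sign flip.
    """
    scale = altitude_m / focal_length_px
    return (-shift_px[0] * scale, -shift_px[1] * scale)


# Hypothetical matched features; the last pair is a deliberately bad match.
prev = [(100, 100), (200, 150), (300, 120)]
curr = [(110, 95), (210, 145), (350, 200)]

shift = estimate_image_shift(prev, curr)
motion = camera_motion_m(shift, altitude_m=10.0, focal_length_px=500.0)
print(shift, motion)
```

A real system also has to find and match the features, and to account for rotation, tilt, and uneven terrain, all of which this sketch leaves out.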

Which brings us closer to home. GPS works almost everywhere on the surface of Earth, although it is less reliable at far northern and southern latitudes. It also stops working the moment you dip even a few inches beneath the surface of the salt water that covers two-thirds of the planet. That makes navigation difficult for autonomous underwater vehicles (AUVs) like Orpheus and Eurydice, which are designed to travel more than six miles below the surface.

Until now, we’ve used the GPS position of the ship and an acoustic communication system known as ultra-short baseline (USBL) navigation to establish the position of a vehicle underwater. This has worked well, but it is prone to errors because the speed of sound underwater varies with temperature and salinity. Those errors matter most when navigating close to the seafloor, where vehicle-based sensors like down-looking sonar and Doppler velocity logs help, but can also be bulky and tax an AUV’s limited power. A vision-based system like the one being used in Orpheus and Eurydice will eventually eliminate the need for both, and greatly improve the safety, accuracy, and duration of hadal missions.
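A rough calculation shows why the speed of sound matters for acoustic positioning. The Python sketch below is a simplification, not the software used on the ship: it treats the range to the vehicle as the sound speed multiplied by half the round-trip travel time of an acoustic ping, and it ignores the bearing angles a real USBL system also measures. The depth and both sound speeds are illustrative assumptions (sound travels at roughly 1,500 meters per second in seawater), and the function names are hypothetical.

```python
def slant_range_m(two_way_time_s, sound_speed_m_s):
    """Range from a round-trip acoustic travel time: speed * time / 2."""
    return sound_speed_m_s * two_way_time_s / 2.0


def range_error_m(true_range_m, true_speed_m_s, assumed_speed_m_s):
    """Range error caused by assuming the wrong average sound speed."""
    # The ping actually travels at the true speed...
    two_way_time_s = 2.0 * true_range_m / true_speed_m_s
    # ...but the range is computed with the assumed speed.
    return slant_range_m(two_way_time_s, assumed_speed_m_s) - true_range_m


# Illustrative numbers: a vehicle 6,000 m away, with sound actually
# averaging 1,520 m/s while the calculation assumes 1,500 m/s.
error = range_error_m(6000.0, 1520.0, 1500.0)
print(f"Range error: {error:.1f} m")
```

With these assumed numbers, a mismatch of just over one percent in sound speed puts the computed range about 79 meters short of the true range.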

NASA engineer Russell Smith and Woods Hole Oceanographic Institution engineer Molly Curran calibrate the cameras on Orpheus prior to a 2018 test dive. Photo by Ken Kostel ©Woods Hole Oceanographic Institution.

While this trip won’t see the vehicles navigating using a pre-existing map, it will see them build maps of the seafloor using their TRN cameras. The more maps we make with overlapping fields of coverage, the better our ability to navigate the seafloor becomes and the more we will learn about the blue planet we call home.